According to Wikipedia, “Gradient descent is a first-order iterative optimization algorithm for finding local minima of a differentiable function”. Sounds like a lot, right? In this article, let’s get acquainted with the Gradient descent algorithm in the most straightforward (and ‘simplest’) way. Before we continue with understanding the ABCDs of Gradient descent (and dig into the…
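As a minimal sketch of the core idea, assume a one-variable function whose derivative we already know. Gradient descent then repeatedly applies the update x ← x − learning_rate · f′(x). The function name `gradient_descent` and its parameters are illustrative, not taken from the article:

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Minimise a one-variable function, given its derivative `grad` (illustrative helper).

    Each iteration moves x a small step against the slope:
        x <- x - learning_rate * grad(x)
    """
    x = x0
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x


# Example: f(x) = (x - 3)**2 has derivative f'(x) = 2 * (x - 3)
# and its only minimum at x = 3.
x_min = gradient_descent(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 4))  # 3.0
```

For this particular f, each step with learning_rate=0.1 shrinks the distance to the minimum by a factor of 0.8. A learning rate above 1.0 would make the iterates diverge instead.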

Category: Maths

Inside neural networks.

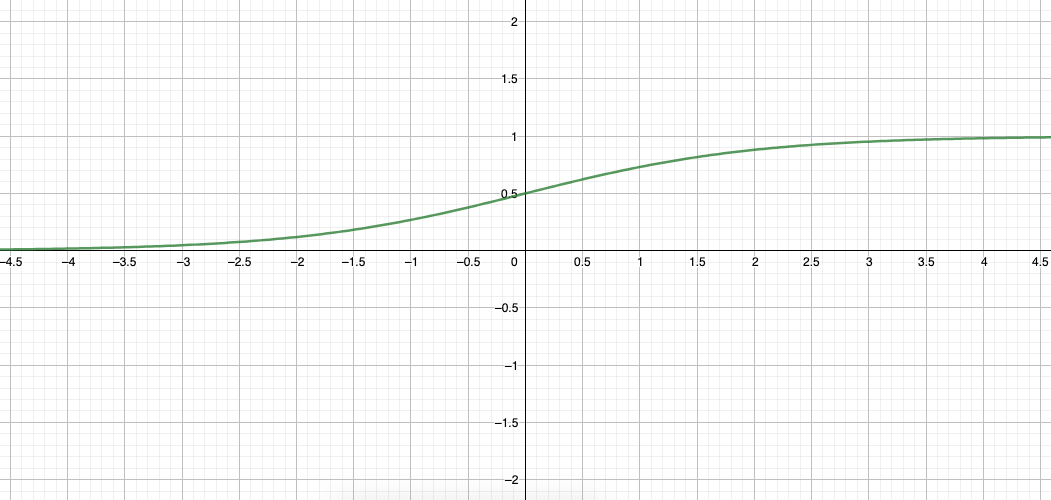

Derivative of Sigmoid Function

In this article, we’ll find the derivative of the Sigmoid Function. The Sigmoid Function is a non-linear function commonly used as an activation function in neural networks.
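The standard result is σ′(x) = σ(x)(1 − σ(x)). The short sketch below (function names are illustrative) implements that closed form and checks it against a numerical central finite-difference estimate:

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x):
    """Closed form: sigma'(x) = sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Compare the closed form against a central finite-difference estimate.
h = 1e-5
for x in (-2.0, 0.0, 3.0):
    numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
    print(f"x={x:+.1f}  closed form={sigmoid_derivative(x):.6f}  numeric={numeric:.6f}")
```

The derivative reaches its maximum of 0.25 at x = 0 and shrinks toward 0 as |x| grows.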

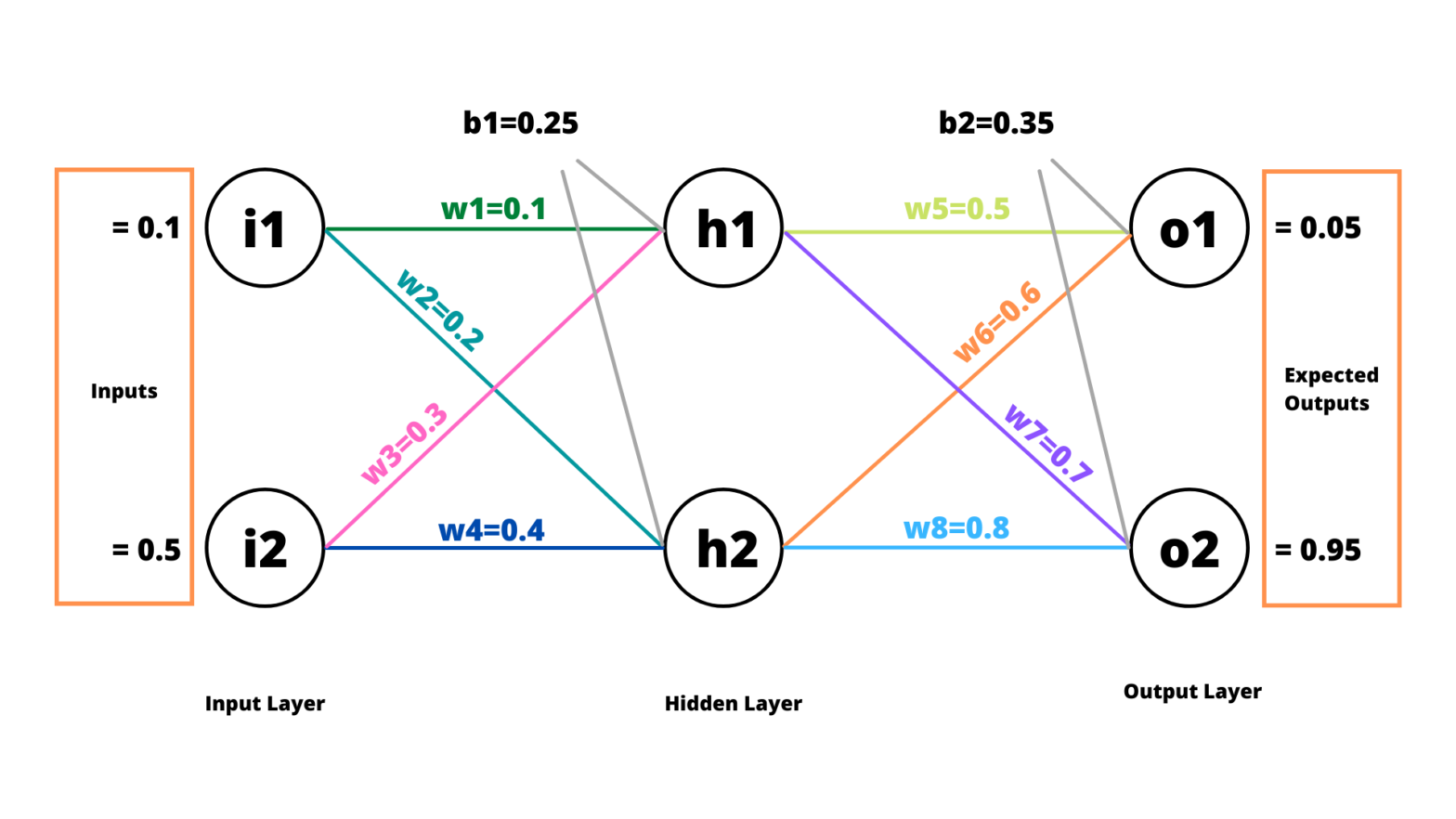

A step-by-step forward pass and backpropagation example

In this article, we’ll walk through a step-by-step example of a forward pass (forward propagation) and a backward pass (backpropagation). We’ll take a neural network with a single hidden layer and work through one complete cycle of forward propagation and backpropagation.
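Here is a minimal plain-Python sketch of one such cycle. It assumes sigmoid activations in both layers, a squared-error loss, and hypothetical toy weights with 2 inputs, 2 hidden units, and 1 output. The article’s own network and numbers may differ. The sketch runs a forward pass, computes the gradients with the chain rule, applies one gradient-descent update, and confirms the loss went down:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy values: 2 inputs, 2 hidden units, 1 output unit.
x = [0.05, 0.10]
target = 0.01
W1 = [[0.15, 0.20], [0.25, 0.30]]  # W1[j][i]: weight from input i to hidden unit j
b1 = [0.35, 0.35]
W2 = [0.40, 0.45]                  # W2[j]: weight from hidden unit j to the output
b2 = 0.60
lr = 0.5                           # learning rate (assumed)


def forward(W1, b1, W2, b2):
    """Forward pass: returns hidden activations, output, and loss 0.5*(target - y)^2."""
    h = [sigmoid(sum(W1[j][i] * x[i] for i in range(2)) + b1[j]) for j in range(2)]
    y = sigmoid(sum(W2[j] * h[j] for j in range(2)) + b2)
    return h, y, 0.5 * (target - y) ** 2


# 1. Forward pass
h, y, loss_before = forward(W1, b1, W2, b2)

# 2. Backward pass (chain rule), computed before any weight is changed
delta_out = (y - target) * y * (1 - y)                 # dLoss/d(output pre-activation)
grad_W2 = [delta_out * h[j] for j in range(2)]
grad_b2 = delta_out
delta_hidden = [delta_out * W2[j] * h[j] * (1 - h[j]) for j in range(2)]
grad_W1 = [[delta_hidden[j] * x[i] for i in range(2)] for j in range(2)]
grad_b1 = delta_hidden

# 3. Gradient-descent update of every parameter
W2 = [W2[j] - lr * grad_W2[j] for j in range(2)]
b2 = b2 - lr * grad_b2
W1 = [[W1[j][i] - lr * grad_W1[j][i] for i in range(2)] for j in range(2)]
b1 = [b1[j] - lr * grad_b1[j] for j in range(2)]

_, _, loss_after = forward(W1, b1, W2, b2)
print(f"loss before: {loss_before:.6f}, after: {loss_after:.6f}")
```

The hidden-layer deltas use the old W2, which is why every gradient is computed before any parameter is updated.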

Mathematical concepts behind Norms in Linear algebra

This article is a beginner’s guide to norms in linear algebra. It also discusses the commonly used norms: the L1 norm, L2 norm, max-norm and Frobenius norm.
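The four norms follow directly from their definitions: L1 = Σ|xᵢ|, L2 = √(Σ xᵢ²), max-norm = max|xᵢ| for a vector, and Frobenius = √(Σ aᵢⱼ²) over all entries of a matrix. A plain-Python sketch (function names are illustrative):

```python
import math


def l1_norm(v):
    """Sum of absolute values."""
    return sum(abs(x) for x in v)


def l2_norm(v):
    """Euclidean length: square root of the sum of squares."""
    return math.sqrt(sum(x * x for x in v))


def max_norm(v):
    """Largest absolute value."""
    return max(abs(x) for x in v)


def frobenius_norm(m):
    """Square root of the sum of squares of every matrix entry."""
    return math.sqrt(sum(x * x for row in m for x in row))


v = [3, -4]
print(l1_norm(v), l2_norm(v), max_norm(v))  # 7 5.0 4
A = [[1, 2], [3, 4]]
print(frobenius_norm(A))  # sqrt(30), about 5.4772
```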

Basic Operations on Tensors

In this article, we’ll see the basic operations (Addition, Broadcasting, Multiplication, Transpose, Inverse) that can be performed on tensors.
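The sketch below applies these operations to rank-2 tensors (matrices) stored as nested Python lists. The helper names are hypothetical. The broadcasting helper covers only one case, adding a row vector to every row, and the inverse helper handles only 2×2 matrices. Libraries such as NumPy provide these operations natively.

```python
def add(A, B):
    """Element-wise addition of two same-shaped matrices."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def broadcast_add(A, row):
    """Broadcasting (one case only): the row vector is added to every row of A."""
    return [[a + r for a, r in zip(ra, row)] for ra in A]


def hadamard(A, B):
    """Element-wise (Hadamard) product."""
    return [[a * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def matmul(A, B):
    """Matrix product: dot product of each row of A with each column of B."""
    return [[sum(a * b for a, b in zip(ra, cb)) for cb in zip(*B)] for ra in A]


def transpose(A):
    """Swap rows and columns."""
    return [list(col) for col in zip(*A)]


def inverse_2x2(A):
    """Inverse of a 2x2 matrix via the adjugate / determinant formula."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(add(A, B))                   # [[6, 8], [10, 12]]
print(broadcast_add(A, [10, 20]))  # [[11, 22], [13, 24]]
print(hadamard(A, B))              # [[5, 12], [21, 32]]
print(matmul(A, B))                # [[19, 22], [43, 50]]
print(transpose(A))                # [[1, 3], [2, 4]]
print(inverse_2x2(A))              # [[-2.0, 1.0], [1.5, -0.5]]
```

Multiplying A by its inverse gives the identity matrix [[1.0, 0.0], [0.0, 1.0]], which is a quick sanity check for the inverse.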

Scalars, Vectors, Matrices and Tensors

This article discusses the fundamentals of linear algebra: scalars, vectors, matrices and tensors. A real-world use case of tensors is also briefly introduced.
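One way to see the progression is through the number of indices each object needs. A scalar has none, a vector has one, a matrix has two, and a tensor can have more. The sketch below uses nested Python lists and a hypothetical `shape` helper. As one common example (not necessarily the article’s), a tiny 2×2 RGB image can be stored as a rank-3 tensor of shape (height, width, channels):

```python
def shape(t):
    """Shape of a regular nested list; a plain number (scalar) has shape ()."""
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0] if t else None
    return tuple(dims)


scalar = 5
vector = [1, 2, 3]
matrix = [[1, 2, 3], [4, 5, 6]]
# Rank-3 tensor: a 2x2 image with 3 colour channels (R, G, B) per pixel.
image = [[[255, 0, 0], [0, 255, 0]],
         [[0, 0, 255], [255, 255, 255]]]

for name, obj in [("scalar", scalar), ("vector", vector),
                  ("matrix", matrix), ("image tensor", image)]:
    s = shape(obj)
    print(f"{name}: shape={s}, rank={len(s)}")
```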